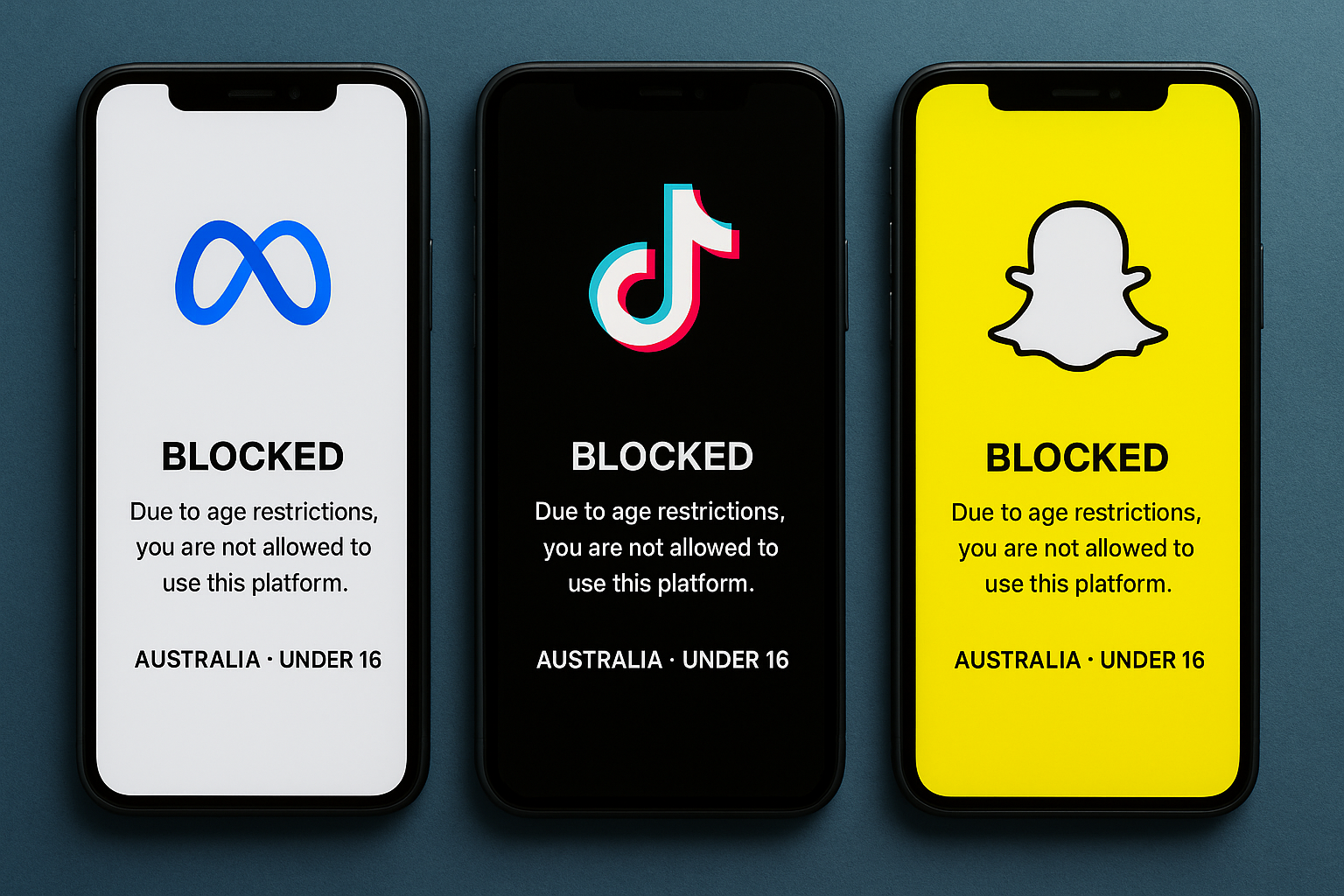

Major social media companies, including Meta, TikTok, and Snapchat, are preparing to comply with Australia’s new law that prohibits users under 16 years old from maintaining social media accounts. The law takes effect on December 10 and will result in the deactivation of over a million accounts belonging to minors.

Platforms will contact affected users, offering them options to download their data or freeze their profiles before deletion. For the rest of Australia’s roughly 20 million social media users, operations are expected to continue without major disruption. Companies are adopting low-profile compliance strategies to avoid penalties of up to 49.5 million Australian dollars (about 32 million US dollars) for violations.

After a year of opposition, firms have agreed to implement automated age detection systems already in use for marketing purposes. These systems estimate user age by analysing engagement patterns such as likes and activity frequency. Dedicated age assurance apps will be introduced mainly to resolve disputes from users who believe their accounts were wrongly restricted.

The technology, though established, is being tested at scale for the first time. In trials, it proved reliable but imperfect, sometimes flagging 16- or 17-year-olds as underage or mistakenly approving 15-year-olds. Errors of the second kind could still expose platforms to financial penalties, since the minors involved would not have been properly blocked.

Julie Dawson, chief policy officer at Yoti, which supplies age assurance tools for several platforms, said the transition period would be brief. “There’ll be a maximum of two to three weeks of people getting to grips with something that they do daily, and then it’s old news,” she said.

Meta, Snapchat, TikTok, and Google declined to comment publicly on implementation details, though all except Google confirmed in parliamentary hearings that they would comply with the law and begin contacting young users.

Blocking minors under the new law

The Australian government introduced the ban following growing concern over social media’s effects on children’s mental health. That concern dates back to the 2021 leak of internal Meta documents that acknowledged such risks, and public debate reignited in 2024 after the release of the book The Anxious Generation.

The legislation requires platforms to block minors directly, removing the need for parental approval or intervention. Its passage followed months of debate between policymakers, free speech advocates, and the technology sector.

TikTok, which reported around 200,000 Australian users aged 13 to 15, told parliament that it was developing a reporting feature to flag underage accounts. Other platforms are expected to rely primarily on their internal algorithms to determine age, with third-party verification required only when disputes arise.

Kick, an Australian-owned livestreaming platform that faced criticism earlier this year after a livestreamed death, is the only domestic company affected by the regulation. A spokesperson said the service “will be compliant” and plans to introduce “a range of measures” to meet legal requirements.

For those disputing age assessments, the new law permits verification through third-party apps. These systems use facial analysis to estimate age, and if results are contested, users can submit official identification for review.

Implementation challenges and age verification limits

Experts warn that age estimation technology remains imperfect, particularly for users aged 16 and 17. Daswin De Silva, a computing professor at La Trobe University, said that “many technological methods of age verification will fail in that narrow band.”

Government data shows that about 600,000 Australians fall within this age group. Many are too young to hold standard identification documents such as driver’s licences, making verification more difficult. The error margin could result in legitimate users being temporarily locked out of their accounts until their age is confirmed through manual review.

Despite these challenges, compliance efforts are moving ahead without major resistance. The government has framed the legislation as a step toward protecting young people online while reducing exposure to harmful content and social pressure.

Technology companies are expected to refine their verification systems over time, and the success of Australia’s rollout will be closely watched internationally. If effective, it could influence similar age-based restrictions in other jurisdictions that are considering tighter controls over youth access to social media.